18. Clustering algorithms#

We will look at developing skills to detect clusters in your data. Identifying clusters can be very helpful when exploring your data, and is a form of unsupervised machine learning.

To perform clustering we will make use of the sklearn library. This library is a very useful machine learning library in python. We’ll use this library a lot in this course!

There is no single best clustering algorithm, as it will often depend on the data you have and the question you’d like to address. In this exercise we will look at two good clustering algorithms.

K-means

Hdbscan

Load python libraries

import numpy as np

import pandas as pd

import seaborn as sns

import sklearn as sk

from sklearn import cluster

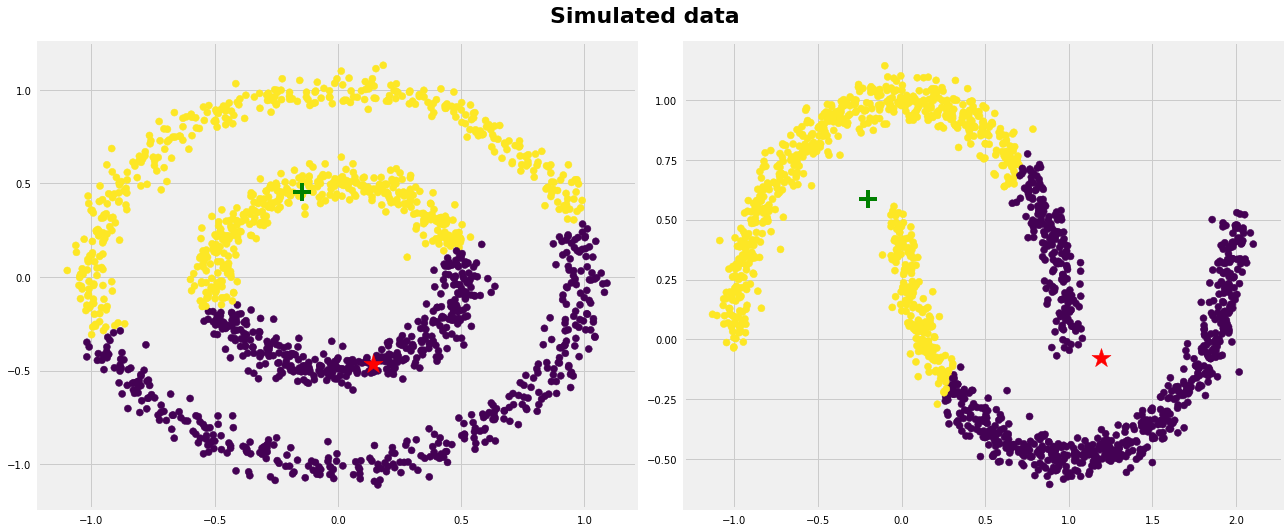

18.1. Simulating data#

Simulating data is a great way to test out different machine learning algorithms. Here we’ll use the numpy library to create some random numbers.

#simulate 100 values from a normal distribution with a mean of 1 and an sd of 4

x = np.random.normal(1,4, size = 10)

#take a look

x

Let’s simulate some data where we know how many clusters there are.

First let’s create 1000 points and set them to class 1. Each point will get a random x and y coordinate.

#simulate some random values

array_class1 = {"x":np.random.normal(1,4, size=1000),

"y":np.random.normal(1,4, size=1000),

"class": 1}

#put them in a dataframe

df_class1 = pd.DataFrame(data=array_class1)

#plot it

sns.scatterplot(data=df_class1, x="x",y="y")

Create another set of 1000 points and assign them to class 2. Then we’ll add the two sets of points together by using concat:

#generate some random values

array_class2 = {"x":np.random.normal(8,5, size=1000),

"y":np.random.normal(8,1, size=1000),

"class": 2}

#put them in a dataframe

df_class2 = pd.DataFrame(data=array_class2)

#bind the two dataframes together by rows

df_class = pd.concat([df_class1,df_class2], axis = 0) #axis=0 just says to bind by rows, axis=1 would be by columns

#plot it

sns.scatterplot(data=df_class, x="x",y="y", hue='class')

18.2. K-Means#

Let’s try out the first classification algorithm: k-means.

Sklearn uses a standard approach to machine learning models. We’ll go through each step with k-means.

First let’s build the machine learning algorithm that we will use (i.e., k-means)

#initalize the kmeans algorithm

clus_kmeans = cluster.KMeans(n_clusters=2)

Second let’s fit the model using data

#fit the model

clus_kmeans.fit(df_class[['x','y']] )

Third, now that the model is built and fit to data we can use it to make predictions!

#make some predictions

df_class['pred_kmeans'] = clus_kmeans.fit_predict(df_class[['x','y']] )

#take a look

df_class

Finally, we can visualize the predictions it made by using seaborn

#plot it!

sns.scatterplot(data=df_class,x="x",y="y",hue="pred_kmeans")

This is what is considered as a hard clustering algorithm - it assigns every point to a cluster. It also makes some assumptions about clusters:

You know in advance how many clusters there are

Clusters are roughly oval shaped (normally distributed along each dimension)

Go back and try shifting the mean and standard deviation of the simulated clusters and see how well k-means does. Try changing the number of clusters k-means looks for. When does it break down?

When this book is used within a class setting there are often good points to break up long sections of coding exercises. We’ll use this stop sign (below) to indicate when we will take a break from coding and discuss more theoretical issues.

18.3. Tuning machine learning algorithms#

The elbow method can be used to help choose how many clusters are likely in the data. It also introduces us to how tune machine learning algorithms by running the model using different parameters and monitoring how well the algorithm performs.

First let’s measure how successfull the clustering algorithm is. There are many ways to measure success in clustering, here we will use mean silhouette distance which describes how far apart the points within clusters are from each other.

#Mean silhouette distance

sk.metrics.silhouette_score(X=df_class.iloc[:,0:2],labels=df_class['pred_kmeans'])

Try the model above with a different number of clusters and see how this mean silhouette value changes. At what number of clusters is the mean silhouette distance the largest (large means more seperation between clusters).

e.g., is the true number of clusters the highest?

18.4. Make our own functions#

Note: here we are changing a parameter and re-running the code. In cases like these it can be sometimes useful to create our own functions. Let’s take a look at how to create a function in python.

#here is a simple function

def run_k_means(numb):

return numb

Now that we’ve created it let’s use it

run_k_means(2)

This function just takes one input (numb) and then prints that number.

Let’s add each of the model steps above inside the function, with the goal of printing the average silhouette distance.

def run_k_means(numb):

#1. initalize the ml algorithm

clus_kmeans = cluster.KMeans(n_clusters=numb)

#2. fit the ml algorithm

clus_kmeans.fit(df_class[['x','y']] )

#3. make some predictions

df_class['pred_kmeans'] = clus_kmeans.fit_predict(df_class[['x','y']] )

#4. measure performance

avg_sil = sk.metrics.silhouette_score(X=df_class.iloc[:,0:2],labels=df_class['pred_kmeans'])

return avg_sil

Notice here that the input (numb) is now used to build a kmeans model with n_clusters = numb

Let’s use this new function and see how it works!

run_k_means(4)

Is it much easier to figure out what number of clusters results in the highest average silhouette score?

18.5. Using loops#

One last coding trick we’ll learn today, is how to use a loop to make this even easier.

Let’s first look at how loops work in python

for i in range(2,10):

print(i)

Now that we know the basic structure of a loop, and what it can do, let’s use it to help figure out what number of clusters is optimal!

for i in range(2,10):

print(run_k_means(i))

Let’s plot this out! To store the silhouette values as we run the loop, we’ll create an empty list (avg_sil) and each run in the loop we’ll add the average silhouette value to the list using append.

avg_sil = []

for i in range(2,10):

avg_sil.append(run_k_means(i))

Now that we have a list of average silhouette values, let’s place these values in a dataframe and plot them using seaborn.

#create a dictionary

dict_sil = {'sil':avg_sil, 'clusters':list(range(2,10)) }

#convert the dictionary into a dataframe

df_sil = pd.DataFrame(dict_sil)

#plot

sns.relplot(x='clusters', y='sil', data=df_sil, kind="line")

18.6. HDBscan#

Ok let’s see how another algorithm does: hdbscan. This method is newer than k-means and focuses on the finding high density regions surrounded by low density.

To run this clustering algorithm we’ll need to import it just like any other library.

import hdbscan

Now we can use the hdbscan clustering method, it will follow the same steps as sklearn.

First initalize the ml algorithm

#initialize the kmeans algorithm (hyperparameter - choose minimum cluster size)

clus_hdbscan = hdbscan.HDBSCAN(min_cluster_size = 30)

Second fit the ml algorithm to the data

#fit the model

clus_hdbscan.fit(df_class[['x','y']] )

Third use the ml algorithm to make some predictions

#make some predictions

df_class['pred_khdbscan'] = clus_hdbscan.fit_predict(df_class[['x','y']] )

Finally, visualize the predictions of the ml algorithm

#plot it!

sns.scatterplot(data=df_class, x="x",y="y", hue='pred_khdbscan')

Notice the negative class? This is HDBscans way of saying that it isn’t sure about those points. It is a soft clustering algorithm in that it will give you the probability of each point belonging to a class.

We can now measure the mean silhouette distance for HDBscan clustering.

#estimate silhouette score for k-means

sk.metrics.silhouette_score(X=df_class.loc[:,['x','y']], labels=df_class['pred_khdbscan'])

#get only those points that are given a cluster

df_class_sub = df_class[df_class.pred_khdbscan > -1]

#estimate silhouette score for k-means

sk.metrics.silhouette_score(X=df_class_sub.loc[:,['x','y']],labels=df_class_sub['pred_khdbscan'])

How does it do compared to k-means? Does that compare with what you see visually?

18.7. Make our own functions#

Let’s try to make another function just like the one we did for the k-means algorithm.

feel free to copy and paste the steps from above remember to think carefully about where the input minNumb will go in the code.

def run_HDBScan(minNumb):

#1. initalize the ml algorithm

clus_hdbscan = hdbscan.HDBSCAN(min_cluster_size = ?)

#2. fit the ml algorithm

clus_hdbscan.?

#3. make some predictions

df_class['pred_khdbscan'] = clus_hdbscan.?

#4. measure performance

avg_sil = sk.metrics.silhouette_score(?)

return avg_sil

Try out you new function and see if it works!

#run your function with 500 as the minimum number of points

run_HDBScan(40)

18.8. Running loops#

Let’s try and use a loop to try out a bunch of different values for the minimum number of points parameter.

the range() function follows range(from,to,by) format. e.g., range(10,70,10) = 10, 20, 30, 40, 50, 60

for ? in range(?,?,?):

print(run_HDBScan(?))

18.9. Bonus#

If all is going well, you might want to try to plot the results!

You can follow along with how we ploted the results from the k-means.

avg_sil_hd = []

for ? in range(?):

avg_sil_hd.?

#create a dictionary

dict_sil = ?

#convert the dictionary into a dataframe

df_sil_hd = ?

#plot

sns.relplot(?)

Can you find an optimum value for min_cluster_size? If you go back and run the HDBScan algorithm with the optimal min_cluster_size do you see large differences in how it clusters the data?

Finally, what happens if you run your function for large min_cluster sizes? How large can you go before your code fails, and why do you think it failed?

18.10. Further reading#

A more detailed look at HDBScan.

A comparison between a number of different clustering algorithms.

If you would like the notebook without missing code check out the full code version.